Blog

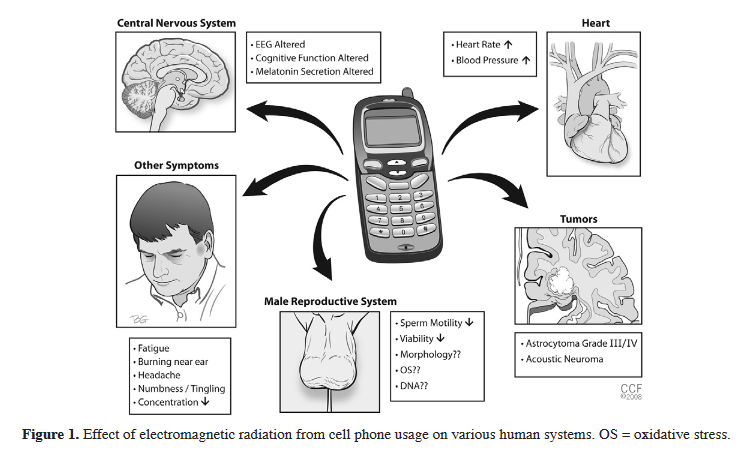

Effects of electromagnetic radiation from cell phone usage on various human systems

Image source:

Makker, K., Varghese, A., Desai, N. R., Mouradi, R., & Agarwal, A. (2009). Cell phones: Modern man’s nemesis? Reproductive BioMedicine Online. https://doi.org/10.1016/S1472-6483(10)60437-3

“Fine print may protect manufacturers legally. Let’s protect consumers in reality: Put the cell phone safety warnings up front, where we can see them.”

http://showthefineprint.org

Protect yourself: http://showthefineprint.org/protect-yourself

Further References

Plain numerical DOI: 10.1016/S1472-6483(10)60437-3

Plain numerical DOI: 10.1002/bem.20386

Plain numerical DOI: 10.1109/GSIS.2011.6044119

Plain numerical DOI: 10.1080/09553000600840922

Plain numerical DOI: 10.1186/2052-336X-12-75

Plain numerical DOI: 10.3969/j.issn.1673-8225.2009.30.031

Plain numerical DOI: 10.1016/j.brainres.2015.01.019

Plain numerical DOI: 10.1016/j.pathophys.2009.11.002

Plain numerical DOI: 10.1016/j.envint.2015.09.025

Plain numerical DOI: 10.4103/0971-6866.16810

Plain numerical DOI: 10.1002/jcp.20327

Plain numerical DOI: 10.3109/10715762.2014.888717

Plain numerical DOI: 10.1007/s11011-017-0180-4

Plain numerical DOI: 10.1016/j.mrgentox.2005.03.006

Plain numerical DOI: 10.1371/journal.pone.0006446

Plain numerical DOI: 10.1046/j.1432-0436.2002.700207.x

Plain numerical DOI: 10.1007/s12013-013-9715-4

Plain numerical DOI: 10.1016/j.biopha.2007.12.004

Plain numerical DOI: 10.1001/jama.2011.201

Plain numerical DOI: 10.1109/INNOVATIONS.2008.4781774

PII: 45/835

Plain numerical DOI: 10.1002/bem.10068

Plain numerical DOI: 10.1016/j.mrgentox.2006.08.008

Text-to-Speech synthesis algorithm

Typography & processing fluency

Typeface matters. The ease of information processing affects the way the percipient evaluates information. Therefore, typography is of crucial importance for effective webdesign.

Processing fluency is the ease with which information is processed. Perceptual fluency is the ease of processing stimuli based on manipulations to perceptual quality. Retrieval fluency is the ease with which information can be retrieved from memory.

Source URL: https://en.wikipedia.org/wiki/Processing_fluency

References

, 8(4), 364–382.

Plain numerical DOI: 10.1207/s15327957pspr0804_3

Plain numerical DOI: 10.2139/ssrn.967768

, 36(5), 876–889.

Plain numerical DOI: 10.1086/612425

Face recognition software

A facial recognition system is a computer application capable of identifying or verifying a person from a digital image or a frame from a video source. One way to do this is to compare selected facial features from the image with the faces stored in a database.

https://github.com/wesbos/HTML5-Face-Detection

```html
<!DOCTYPE html>
<html>
<head>
  <meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
  <title>Face Detector</title>
  <link rel="stylesheet" href="style.css"/>
</head>
<body>
  <div class="wrapper">
    <h1>HTML5 GLASSES</h1>
    <p>Created by <a href="http://twitter.com/wesbos" target="_blank" rel="noopener noreferrer">Wes Bos</a>. See full details <a href="">here.</a></p>

    <!-- Our Main Video Element -->
    <!-- controls is a boolean attribute: its presence shows the player controls -->
    <video height="426" width="640" controls>
      <source src="videos/wes4.ogg" />
      <source src="videos/wes4.mp4" />
    </video>

    <!-- Our Canvas Element for output -->
    <canvas id="output" height="426" width="640" ></canvas>

    <!-- div to track progress -->
    <div id="elapsed_time">Press play for HTML5 Glasses!</div>
  </div>

  <script type="text/javascript" src="scripts/ccv.js"></script>
  <script type="text/javascript" src="scripts/face.js"></script>
  <script type="text/javascript" src="scripts/scripts.js"></script>
</body>
</html>
```
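The comparison step described above, matching features of a face against a database, can be sketched as a nearest-neighbour search. This is a hypothetical illustration, not part of ccv.js or of the HTML5-Face-Detection demo (which, as its name says, performs face detection). It assumes each face has already been reduced to a numeric feature vector by some extractor; the names `identify` and `euclidean`, the threshold and the tiny database are made up for the example.

```javascript
// Hypothetical sketch: identify a face by comparing its feature vector with
// the vectors stored in a small in-memory "face database".
// The feature vectors are assumed to come from some upstream extractor.

/** Euclidean distance between two equal-length feature vectors. */
function euclidean(a, b) {
  if (a.length !== b.length) throw new Error("feature length mismatch");
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

/**
 * Returns the closest enrolled identity and its distance, or null when even
 * the best match is farther than `threshold` (the face is treated as unknown).
 */
function identify(probe, database, threshold) {
  let bestName = null;
  let bestDist = Infinity;
  for (const [name, features] of Object.entries(database)) {
    const dist = euclidean(probe, features);
    if (dist < bestDist) {
      bestName = name;
      bestDist = dist;
    }
  }
  return bestDist <= threshold ? { name: bestName, distance: bestDist } : null;
}

// Usage with made-up 3-D feature vectors:
const faceDatabase = { alice: [0, 0, 1], bob: [1, 0, 0] };
console.log(identify([0.1, 0, 0.9], faceDatabase, 0.5)); // matches alice (distance about 0.141)
console.log(identify([0.5, 0.5, 0.5], faceDatabase, 0.5)); // null: no match close enough
```

The threshold is what lets such a system answer "unknown" instead of always returning the closest enrolled person; real systems use much longer feature vectors and tune the threshold on held-out data.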

Cybernetics and webdesign

Cybernetics is a transdisciplinary approach for exploring regulatory systems—their structures, constraints, and possibilities. Norbert Wiener defined cybernetics in 1948 as “the scientific study of control and communication in the animal and the machine.” In other words, it is the scientific study of how humans, animals and machines control and communicate with each other.

Cybernetics is applicable when a system being analyzed incorporates a closed signaling loop—originally referred to as a “circular causal” relationship—that is, where action by the system generates some change in its environment and that change is reflected in the system in some manner (feedback) that triggers a system change. Cybernetics is relevant to, for example, mechanical, physical, biological, cognitive, and social systems. The essential goal of the broad field of cybernetics is to understand and define the functions and processes of systems that have goals and that participate in circular, causal chains that move from action to sensing to comparison with desired goal, and again to action. Its focus is how anything (digital, mechanical or biological) processes information, reacts to information, and changes or can be changed to better accomplish the first two tasks. Cybernetics includes the study of feedback, black boxes and derived concepts such as communication and control in living organisms, machines and organizations including self-organization.
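The circular chain of action, sensing, comparison with the goal and renewed action can be made concrete in a few lines of JavaScript. The sketch below is a minimal proportional feedback loop; the regulated quantity, the gain, the goal and the "leak" disturbance are illustrative assumptions, not taken from the cybernetics literature.

```javascript
// Minimal sketch of a closed feedback loop: act -> sense -> compare -> act.
// The regulated variable, gain, goal and leak are illustrative assumptions.

/**
 * Simulates `steps` iterations of a proportional feedback loop and returns
 * the state after each iteration (index 0 is the initial state).
 */
function runFeedbackLoop({ goal, initial, gain, leak, steps }) {
  let state = initial; // the quantity in the environment being regulated
  const history = [state];
  for (let t = 0; t < steps; t++) {
    const sensed = state;        // sensing: observe the environment
    const error = goal - sensed; // comparison with the desired goal
    const action = gain * error; // action proportional to the error
    // The action changes the environment, while a disturbance ("leak")
    // keeps pulling the state towards 0, so the loop must keep correcting.
    state = state + action - leak * state;
    history.push(state);
  }
  return history;
}

const trace = runFeedbackLoop({ goal: 20, initial: 10, gain: 0.5, leak: 0.05, steps: 30 });
console.log(trace.slice(0, 4).map((v) => v.toFixed(2)).join(" -> "));
```

With these numbers the state settles at 0.5 · 20 / 0.55 ≈ 18.18 rather than at the goal of 20: purely proportional feedback leaves a steady-state error under a constant disturbance, which is one reason practical controllers add an integral term.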

WebGL Interactive Physics Engine

Click with your mouse to interact with the application…

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <title>Ammo.js softbody volume demo</title>
  <meta charset="utf-8">
  <meta name="viewport" content="width=device-width, user-scalable=no, minimum-scale=1.0, maximum-scale=1.0">
  <link type="text/css" rel="stylesheet" href="main.css">
  <style>
    body {
      color: #333;
    }
  </style>
</head>
<body>
  <div id="info">
    Ammo.js physics soft body volume demo<br/>
    Click to throw a ball
  </div>
  <div id="container"></div>

  <script src="../build/three.js"></script>
  <script src="js/libs/ammo.js"></script>
  <script src="js/controls/OrbitControls.js"></script>
  <script src="js/utils/BufferGeometryUtils.js"></script>
  <script src="js/WebGL.js"></script>
  <script src="js/libs/stats.min.js"></script>

  <script>
    if ( WEBGL.isWebGLAvailable() === false ) {
      document.body.appendChild( WEBGL.getWebGLErrorMessage() );
    }

    // Graphics variables
    var container, stats;
    var camera, controls, scene, renderer;
    var textureLoader;
    var clock = new THREE.Clock();
    var clickRequest = false;
    var mouseCoords = new THREE.Vector2();
    var raycaster = new THREE.Raycaster();
    var ballMaterial = new THREE.MeshPhongMaterial( { color: 0x202020 } );
    var pos = new THREE.Vector3();
    var quat = new THREE.Quaternion();

    // Physics variables
    var gravityConstant = - 9.8;
    var physicsWorld;
    var rigidBodies = [];
    var softBodies = [];
    var margin = 0.05;
    var transformAux1;
    var softBodyHelpers;

    Ammo().then( function( AmmoLib ) {
      Ammo = AmmoLib;
      init();
      animate();
    } );

    function init() {
      initGraphics();
      initPhysics();
      createObjects();
      initInput();
    }

    function initGraphics() {
      container = document.getElementById( 'container' );
      camera = new THREE.PerspectiveCamera( 60, window.innerWidth / window.innerHeight, 0.2, 2000 );
      scene = new THREE.Scene();
      scene.background = new THREE.Color( 0xbfd1e5 );
      camera.position.set( - 7, 5, 8 );
      renderer = new THREE.WebGLRenderer();
      renderer.setPixelRatio( window.devicePixelRatio );
      renderer.setSize( window.innerWidth, window.innerHeight );
      renderer.shadowMap.enabled = true;
      container.appendChild( renderer.domElement );
      controls = new THREE.OrbitControls( camera, renderer.domElement );
      controls.target.set( 0, 2, 0 );
      controls.update();
      textureLoader = new THREE.TextureLoader();
      var ambientLight = new THREE.AmbientLight( 0x404040 );
      scene.add( ambientLight );
      var light = new THREE.DirectionalLight( 0xffffff, 1 );
      light.position.set( - 10, 10, 5 );
      light.castShadow = true;
      var d = 20;
      light.shadow.camera.left = - d;
      light.shadow.camera.right = d;
      light.shadow.camera.top = d;
      light.shadow.camera.bottom = - d;
      light.shadow.camera.near = 2;
      light.shadow.camera.far = 50;
      light.shadow.mapSize.x = 1024;
      light.shadow.mapSize.y = 1024;
      scene.add( light );
      stats = new Stats();
      stats.domElement.style.position = 'absolute';
      stats.domElement.style.top = '0px';
      container.appendChild( stats.domElement );
      window.addEventListener( 'resize', onWindowResize, false );
    }

    function initPhysics() {
      // Physics configuration
      var collisionConfiguration = new Ammo.btSoftBodyRigidBodyCollisionConfiguration();
      var dispatcher = new Ammo.btCollisionDispatcher( collisionConfiguration );
      var broadphase = new Ammo.btDbvtBroadphase();
      var solver = new Ammo.btSequentialImpulseConstraintSolver();
      var softBodySolver = new Ammo.btDefaultSoftBodySolver();
      physicsWorld = new Ammo.btSoftRigidDynamicsWorld( dispatcher, broadphase, solver, collisionConfiguration, softBodySolver );
      physicsWorld.setGravity( new Ammo.btVector3( 0, gravityConstant, 0 ) );
      physicsWorld.getWorldInfo().set_m_gravity( new Ammo.btVector3( 0, gravityConstant, 0 ) );
      transformAux1 = new Ammo.btTransform();
      softBodyHelpers = new Ammo.btSoftBodyHelpers();
    }

    function createObjects() {
      // Ground
      pos.set( 0, - 0.5, 0 );
      quat.set( 0, 0, 0, 1 );
      var ground = createParalellepiped( 40, 1, 40, 0, pos, quat, new THREE.MeshPhongMaterial( { color: 0xFFFFFF } ) );
      ground.castShadow = true;
      ground.receiveShadow = true;
      textureLoader.load( "textures/grid.png", function ( texture ) {
        texture.wrapS = THREE.RepeatWrapping;
        texture.wrapT = THREE.RepeatWrapping;
        texture.repeat.set( 40, 40 );
        ground.material.map = texture;
        ground.material.needsUpdate = true;
      } );

      // Create soft volumes
      var volumeMass = 15;
      var sphereGeometry = new THREE.SphereBufferGeometry( 1.5, 40, 25 );
      sphereGeometry.translate( 5, 5, 0 );
      createSoftVolume( sphereGeometry, volumeMass, 250 );
      var boxGeometry = new THREE.BoxBufferGeometry( 1, 1, 5, 4, 4, 20 );
      boxGeometry.translate( - 2, 5, 0 );
      createSoftVolume( boxGeometry, volumeMass, 120 );

      // Ramp
      pos.set( 3, 1, 0 );
      quat.setFromAxisAngle( new THREE.Vector3( 0, 0, 1 ), 30 * Math.PI / 180 );
      var obstacle = createParalellepiped( 10, 1, 4, 0, pos, quat, new THREE.MeshPhongMaterial( { color: 0x606060 } ) );
      obstacle.castShadow = true;
      obstacle.receiveShadow = true;
    }

    function processGeometry( bufGeometry ) {
      // Only consider the position values when merging the vertices
      var posOnlyBufGeometry = new THREE.BufferGeometry();
      posOnlyBufGeometry.addAttribute( 'position', bufGeometry.getAttribute( 'position' ) );
      posOnlyBufGeometry.setIndex( bufGeometry.getIndex() );
      // Merge the vertices so the triangle soup is converted to indexed triangles
      var indexedBufferGeom = THREE.BufferGeometryUtils.mergeVertices( posOnlyBufGeometry );
      // Create index arrays mapping the indexed vertices to bufGeometry vertices
      mapIndices( bufGeometry, indexedBufferGeom );
    }

    function isEqual( x1, y1, z1, x2, y2, z2 ) {
      var delta = 0.000001;
      return Math.abs( x2 - x1 ) < delta &&
        Math.abs( y2 - y1 ) < delta &&
        Math.abs( z2 - z1 ) < delta;
    }

    function mapIndices( bufGeometry, indexedBufferGeom ) {
      // Creates ammoVertices, ammoIndices and ammoIndexAssociation in bufGeometry
      var vertices = bufGeometry.attributes.position.array;
      var idxVertices = indexedBufferGeom.attributes.position.array;
      var indices = indexedBufferGeom.index.array;
      var numIdxVertices = idxVertices.length / 3;
      var numVertices = vertices.length / 3;
      bufGeometry.ammoVertices = idxVertices;
      bufGeometry.ammoIndices = indices;
      bufGeometry.ammoIndexAssociation = [];
      for ( var i = 0; i < numIdxVertices; i ++ ) {
        var association = [];
        bufGeometry.ammoIndexAssociation.push( association );
        var i3 = i * 3;
        for ( var j = 0; j < numVertices; j ++ ) {
          var j3 = j * 3;
          if ( isEqual( idxVertices[ i3 ], idxVertices[ i3 + 1 ], idxVertices[ i3 + 2 ],
            vertices[ j3 ], vertices[ j3 + 1 ], vertices[ j3 + 2 ] ) ) {
            association.push( j3 );
          }
        }
      }
    }

    function createSoftVolume( bufferGeom, mass, pressure ) {
      processGeometry( bufferGeom );
      var volume = new THREE.Mesh( bufferGeom, new THREE.MeshPhongMaterial( { color: 0xFFFFFF } ) );
      volume.castShadow = true;
      volume.receiveShadow = true;
      volume.frustumCulled = false;
      scene.add( volume );
      textureLoader.load( "textures/colors.png", function ( texture ) {
        volume.material.map = texture;
        volume.material.needsUpdate = true;
      } );

      // Volume physics object
      var volumeSoftBody = softBodyHelpers.CreateFromTriMesh(
        physicsWorld.getWorldInfo(),
        bufferGeom.ammoVertices,
        bufferGeom.ammoIndices,
        bufferGeom.ammoIndices.length / 3,
        true );
      var sbConfig = volumeSoftBody.get_m_cfg();
      sbConfig.set_viterations( 40 );
      sbConfig.set_piterations( 40 );
      // Soft-soft and soft-rigid collisions
      sbConfig.set_collisions( 0x11 );
      // Friction
      sbConfig.set_kDF( 0.1 );
      // Damping
      sbConfig.set_kDP( 0.01 );
      // Pressure
      sbConfig.set_kPR( pressure );
      // Stiffness
      volumeSoftBody.get_m_materials().at( 0 ).set_m_kLST( 0.9 );
      volumeSoftBody.get_m_materials().at( 0 ).set_m_kAST( 0.9 );
      volumeSoftBody.setTotalMass( mass, false );
      Ammo.castObject( volumeSoftBody, Ammo.btCollisionObject ).getCollisionShape().setMargin( margin );
      physicsWorld.addSoftBody( volumeSoftBody, 1, - 1 );
      volume.userData.physicsBody = volumeSoftBody;
      // Disable deactivation
      volumeSoftBody.setActivationState( 4 );
      softBodies.push( volume );
    }

    function createParalellepiped( sx, sy, sz, mass, pos, quat, material ) {
      var threeObject = new THREE.Mesh( new THREE.BoxBufferGeometry( sx, sy, sz, 1, 1, 1 ), material );
      var shape = new Ammo.btBoxShape( new Ammo.btVector3( sx * 0.5, sy * 0.5, sz * 0.5 ) );
      shape.setMargin( margin );
      createRigidBody( threeObject, shape, mass, pos, quat );
      return threeObject;
    }

    function createRigidBody( threeObject, physicsShape, mass, pos, quat ) {
      threeObject.position.copy( pos );
      threeObject.quaternion.copy( quat );
      var transform = new Ammo.btTransform();
      transform.setIdentity();
      transform.setOrigin( new Ammo.btVector3( pos.x, pos.y, pos.z ) );
      transform.setRotation( new Ammo.btQuaternion( quat.x, quat.y, quat.z, quat.w ) );
      var motionState = new Ammo.btDefaultMotionState( transform );
      var localInertia = new Ammo.btVector3( 0, 0, 0 );
      physicsShape.calculateLocalInertia( mass, localInertia );
      var rbInfo = new Ammo.btRigidBodyConstructionInfo( mass, motionState, physicsShape, localInertia );
      var body = new Ammo.btRigidBody( rbInfo );
      threeObject.userData.physicsBody = body;
      scene.add( threeObject );
      if ( mass > 0 ) {
        rigidBodies.push( threeObject );
        // Disable deactivation
        body.setActivationState( 4 );
      }
      physicsWorld.addRigidBody( body );
      return body;
    }

    function initInput() {
      window.addEventListener( 'mousedown', function ( event ) {
        if ( ! clickRequest ) {
          mouseCoords.set(
            ( event.clientX / window.innerWidth ) * 2 - 1,
            - ( event.clientY / window.innerHeight ) * 2 + 1
          );
          clickRequest = true;
        }
      }, false );
    }

    function processClick() {
      if ( clickRequest ) {
        raycaster.setFromCamera( mouseCoords, camera );
        // Creates a ball
        var ballMass = 3;
        var ballRadius = 0.4;
        var ball = new THREE.Mesh( new THREE.SphereBufferGeometry( ballRadius, 18, 16 ), ballMaterial );
        ball.castShadow = true;
        ball.receiveShadow = true;
        var ballShape = new Ammo.btSphereShape( ballRadius );
        ballShape.setMargin( margin );
        pos.copy( raycaster.ray.direction );
        pos.add( raycaster.ray.origin );
        quat.set( 0, 0, 0, 1 );
        var ballBody = createRigidBody( ball, ballShape, ballMass, pos, quat );
        ballBody.setFriction( 0.5 );
        pos.copy( raycaster.ray.direction );
        pos.multiplyScalar( 14 );
        ballBody.setLinearVelocity( new Ammo.btVector3( pos.x, pos.y, pos.z ) );
        clickRequest = false;
      }
    }

    function onWindowResize() {
      camera.aspect = window.innerWidth / window.innerHeight;
      camera.updateProjectionMatrix();
      renderer.setSize( window.innerWidth, window.innerHeight );
    }

    function animate() {
      requestAnimationFrame( animate );
      render();
      stats.update();
    }

    function render() {
      var deltaTime = clock.getDelta();
      updatePhysics( deltaTime );
      processClick();
      renderer.render( scene, camera );
    }

    function updatePhysics( deltaTime ) {
      // Step world
      physicsWorld.stepSimulation( deltaTime, 10 );

      // Update soft volumes
      for ( var i = 0, il = softBodies.length; i < il; i ++ ) {
        var volume = softBodies[ i ];
        var geometry = volume.geometry;
        var softBody = volume.userData.physicsBody;
        var volumePositions = geometry.attributes.position.array;
        var volumeNormals = geometry.attributes.normal.array;
        var association = geometry.ammoIndexAssociation;
        var numVerts = association.length;
        var nodes = softBody.get_m_nodes();
        for ( var j = 0; j < numVerts; j ++ ) {
          var node = nodes.at( j );
          var nodePos = node.get_m_x();
          var x = nodePos.x();
          var y = nodePos.y();
          var z = nodePos.z();
          var nodeNormal = node.get_m_n();
          var nx = nodeNormal.x();
          var ny = nodeNormal.y();
          var nz = nodeNormal.z();
          var assocVertex = association[ j ];
          for ( var k = 0, kl = assocVertex.length; k < kl; k ++ ) {
            var indexVertex = assocVertex[ k ];
            volumePositions[ indexVertex ] = x;
            volumeNormals[ indexVertex ] = nx;
            indexVertex ++;
            volumePositions[ indexVertex ] = y;
            volumeNormals[ indexVertex ] = ny;
            indexVertex ++;
            volumePositions[ indexVertex ] = z;
            volumeNormals[ indexVertex ] = nz;
          }
        }
        geometry.attributes.position.needsUpdate = true;
        geometry.attributes.normal.needsUpdate = true;
      }

      // Update rigid bodies
      for ( var i = 0, il = rigidBodies.length; i < il; i ++ ) {
        var objThree = rigidBodies[ i ];
        var objPhys = objThree.userData.physicsBody;
        var ms = objPhys.getMotionState();
        if ( ms ) {
          ms.getWorldTransform( transformAux1 );
          var p = transformAux1.getOrigin();
          var q = transformAux1.getRotation();
          objThree.position.set( p.x(), p.y(), p.z() );
          objThree.quaternion.set( q.x(), q.y(), q.z(), q.w() );
        }
      }
    }
  </script>
</body>
</html>
```

Source URL: https://github.com/mrdoob/three.js/blob/master/examples/webgl_physics_volume.html
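The demo advances the physics world with `physicsWorld.stepSimulation( deltaTime, 10 )`. In Bullet, from which Ammo.js is compiled, the second argument is the maximum number of fixed-size substeps per call, and the fixed step size defaults to 1/60 s. The hypothetical sketch below illustrates that idea in plain JavaScript without Ammo.js: variable frame time is accumulated and consumed in fixed steps, capped at ten per frame. The single falling body, the ground plane at y = 0 and the semi-implicit Euler integrator are assumptions chosen to keep the example small; this is not how Bullet integrates internally.

```javascript
// Hypothetical sketch of fixed-timestep sub-stepping with an accumulator,
// similar in spirit to stepSimulation( deltaTime, 10 ). Not Ammo.js code.

const FIXED_DT = 1 / 60;   // fixed physics step in seconds
const MAX_SUB_STEPS = 10;  // cap on substeps per rendered frame
const GRAVITY = -9.8;      // same value as gravityConstant in the demo

function createWorld(y0) {
  // One body described by its height and vertical velocity.
  return { y: y0, vy: 0, accumulator: 0 };
}

function integrate(body, dt) {
  body.vy += GRAVITY * dt; // semi-implicit Euler: velocity first...
  body.y += body.vy * dt;  // ...then position with the new velocity
  if (body.y < 0) {        // crude ground plane at y = 0
    body.y = 0;
    body.vy = 0;
  }
}

/** Advances the world by a variable frame time; returns substeps taken. */
function stepWorld(world, frameDt) {
  world.accumulator += frameDt;
  let steps = 0;
  while (world.accumulator >= FIXED_DT && steps < MAX_SUB_STEPS) {
    integrate(world, FIXED_DT);
    world.accumulator -= FIXED_DT;
    steps++;
  }
  // After a very long frame, drop the backlog instead of trying to catch up
  // on every following frame.
  if (steps === MAX_SUB_STEPS && world.accumulator >= FIXED_DT) {
    world.accumulator = 0;
  }
  return steps;
}

// Usage: a 1/30 s frame is consumed as two 1/60 s substeps.
const world = createWorld(10);
console.log(stepWorld(world, 1 / 30), world.y.toFixed(4));
```

Fixed steps keep the simulation's behaviour independent of the display's frame rate, and the cap stops one long frame (for example after a tab was in the background) from snowballing into ever more physics work per frame.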

3D Webdesign: The next frontier

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <title>three.js - kinect</title>
  <meta charset="utf-8">
  <meta name="viewport" content="width=device-width, user-scalable=no, minimum-scale=1.0, maximum-scale=1.0">
  <link type="text/css" rel="stylesheet" href="main.css">
</head>
<body>
  <script src="../build/three.js"></script>
  <script src='js/libs/dat.gui.min.js'></script>
  <script src="js/libs/stats.min.js"></script>
  <script src="js/WebGL.js"></script>

  <video id="video" loop muted crossOrigin="anonymous" webkit-playsinline style="display:none">
    <source src="textures/kinect.webm">
    <source src="textures/kinect.mp4">
  </video>

  <script id="vs" type="x-shader/x-vertex">
    uniform sampler2D map;
    uniform float width;
    uniform float height;
    uniform float nearClipping, farClipping;
    uniform float pointSize;
    uniform float zOffset;
    varying vec2 vUv;
    const float XtoZ = 1.11146; // tan( 1.0144686 / 2.0 ) * 2.0;
    const float YtoZ = 0.83359; // tan( 0.7898090 / 2.0 ) * 2.0;
    void main() {
      vUv = vec2( position.x / width, position.y / height );
      vec4 color = texture2D( map, vUv );
      float depth = ( color.r + color.g + color.b ) / 3.0;
      // Projection code by @kcmic
      float z = ( 1.0 - depth ) * (farClipping - nearClipping) + nearClipping;
      vec4 pos = vec4(
        ( position.x / width - 0.5 ) * z * XtoZ,
        ( position.y / height - 0.5 ) * z * YtoZ,
        - z + zOffset,
        1.0);
      gl_PointSize = pointSize;
      gl_Position = projectionMatrix * modelViewMatrix * pos;
    }
  </script>

  <script id="fs" type="x-shader/x-fragment">
    uniform sampler2D map;
    varying vec2 vUv;
    void main() {
      vec4 color = texture2D( map, vUv );
      gl_FragColor = vec4( color.r, color.g, color.b, 0.2 );
    }
  </script>

  <script>
    var container;
    var scene, camera, renderer;
    var geometry, mesh, material;
    var mouse, center;
    var stats;

    if ( WEBGL.isWebGLAvailable() ) {
      init();
      animate();
    } else {
      document.body.appendChild( WEBGL.getWebGLErrorMessage() );
    }

    function init() {
      container = document.createElement( 'div' );
      document.body.appendChild( container );
      var info = document.createElement( 'div' );
      info.id = 'info';
      info.innerHTML = '<a href="http://threejs.org" target="_blank" rel="noopener noreferrer">three.js</a> - kinect';
      document.body.appendChild( info );
      stats = new Stats();
      // container.appendChild( stats.dom );
      camera = new THREE.PerspectiveCamera( 50, window.innerWidth / window.innerHeight, 1, 10000 );
      camera.position.set( 0, 0, 500 );
      scene = new THREE.Scene();
      center = new THREE.Vector3();
      center.z = - 1000;
      var video = document.getElementById( 'video' );
      video.addEventListener( 'loadedmetadata', function () {
        var texture = new THREE.VideoTexture( video );
        texture.minFilter = THREE.NearestFilter;
        var width = 640, height = 480;
        var nearClipping = 850, farClipping = 4000;
        geometry = new THREE.BufferGeometry();
        var vertices = new Float32Array( width * height * 3 );
        for ( var i = 0, j = 0, l = vertices.length; i < l; i += 3, j ++ ) {
          vertices[ i ] = j % width;
          vertices[ i + 1 ] = Math.floor( j / width );
        }
        geometry.addAttribute( 'position', new THREE.BufferAttribute( vertices, 3 ) );
        material = new THREE.ShaderMaterial( {
          uniforms: {
            "map": { value: texture },
            "width": { value: width },
            "height": { value: height },
            "nearClipping": { value: nearClipping },
            "farClipping": { value: farClipping },
            "pointSize": { value: 2 },
            "zOffset": { value: 1000 }
          },
          vertexShader: document.getElementById( 'vs' ).textContent,
          fragmentShader: document.getElementById( 'fs' ).textContent,
          blending: THREE.AdditiveBlending,
          depthTest: false, depthWrite: false,
          transparent: true
        } );
        mesh = new THREE.Points( geometry, material );
        scene.add( mesh );
        var gui = new dat.GUI();
        gui.add( material.uniforms.nearClipping, 'value', 1, 10000, 1.0 ).name( 'nearClipping' );
        gui.add( material.uniforms.farClipping, 'value', 1, 10000, 1.0 ).name( 'farClipping' );
        gui.add( material.uniforms.pointSize, 'value', 1, 10, 1.0 ).name( 'pointSize' );
        gui.add( material.uniforms.zOffset, 'value', 0, 4000, 1.0 ).name( 'zOffset' );
        gui.close();
      }, false );
      video.play();
      renderer = new THREE.WebGLRenderer();
      renderer.setPixelRatio( window.devicePixelRatio );
      renderer.setSize( window.innerWidth, window.innerHeight );
      container.appendChild( renderer.domElement );
      mouse = new THREE.Vector3( 0, 0, 1 );
      document.addEventListener( 'mousemove', onDocumentMouseMove, false );
      window.addEventListener( 'resize', onWindowResize, false );
    }

    function onWindowResize() {
      camera.aspect = window.innerWidth / window.innerHeight;
      camera.updateProjectionMatrix();
      renderer.setSize( window.innerWidth, window.innerHeight );
    }

    function onDocumentMouseMove( event ) {
      mouse.x = ( event.clientX - window.innerWidth / 2 ) * 8;
      mouse.y = ( event.clientY - window.innerHeight / 2 ) * 8;
    }

    function animate() {
      requestAnimationFrame( animate );
      render();
      stats.update();
    }

    function render() {
      camera.position.x += ( mouse.x - camera.position.x ) * 0.05;
      camera.position.y += ( - mouse.y - camera.position.y ) * 0.05;
      camera.lookAt( center );
      renderer.render( scene, camera );
    }
  </script>
</body>
</html>
```

Source: https://threejs.org
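The vertex shader above turns every pixel of the depth video into a point in 3D space. The same arithmetic, ported to JavaScript so it can be inspected outside the GPU, is sketched below. The function name `depthPixelToPoint` is hypothetical; the constants and default values (640×480 frame, nearClipping 850, farClipping 4000, zOffset 1000) are copied from the example, and `depth` is the averaged RGB value in [0, 1].

```javascript
// Sketch: CPU port of the depth-to-3D mapping in the kinect vertex shader.

const XtoZ = 1.11146; // tan( 1.0144686 / 2.0 ) * 2.0, as in the shader
const YtoZ = 0.83359; // tan( 0.7898090 / 2.0 ) * 2.0, as in the shader

/** Maps pixel (px, py) with normalised depth in [0, 1] to a 3D point. */
function depthPixelToPoint(px, py, depth, opts) {
  const { width, height, nearClipping, farClipping, zOffset } = opts;
  // Brighter pixels (depth near 1) map to nearClipping, darker ones to farClipping.
  const z = (1.0 - depth) * (farClipping - nearClipping) + nearClipping;
  return {
    x: (px / width - 0.5) * z * XtoZ,  // horizontal offset grows with distance
    y: (py / height - 0.5) * z * YtoZ, // vertical offset grows with distance
    z: -z + zOffset,                   // camera looks down the negative z axis
  };
}

const kinectOpts = { width: 640, height: 480, nearClipping: 850, farClipping: 4000, zOffset: 1000 };
console.log(depthPixelToPoint(320, 240, 1.0, kinectOpts)); // { x: 0, y: 0, z: 150 }
```

XtoZ and YtoZ appear to act as field-of-view factors (twice the tangent of half the horizontal and vertical viewing angles): multiplying a normalised pixel offset by the distance z spreads far points further apart, which undoes the perspective projection of the depth camera.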

Bistable perception and perceptual-shifts

Visit www.qbism.art for an art/science web project that focuses on bistable perception from a quantum cognition perspective.

References

Plain numerical DOI: 10.1016/j.neuroimage.2015.06.053
